Google crawling and indexing optimization is the foundation of search engine visibility. If Google cannot crawl or index a page, that page cannot rank, no matter how strong its content is.

This in-depth guide explains how Googlebot works, how indexing decisions are made, and how to optimize your website structure, internal linking, and technical SEO for faster indexing and sustainable rankings. For broader SEO fundamentals and updates, see the SEO category on SEO Traffic Hero.

What Is Google Crawling and Indexing?

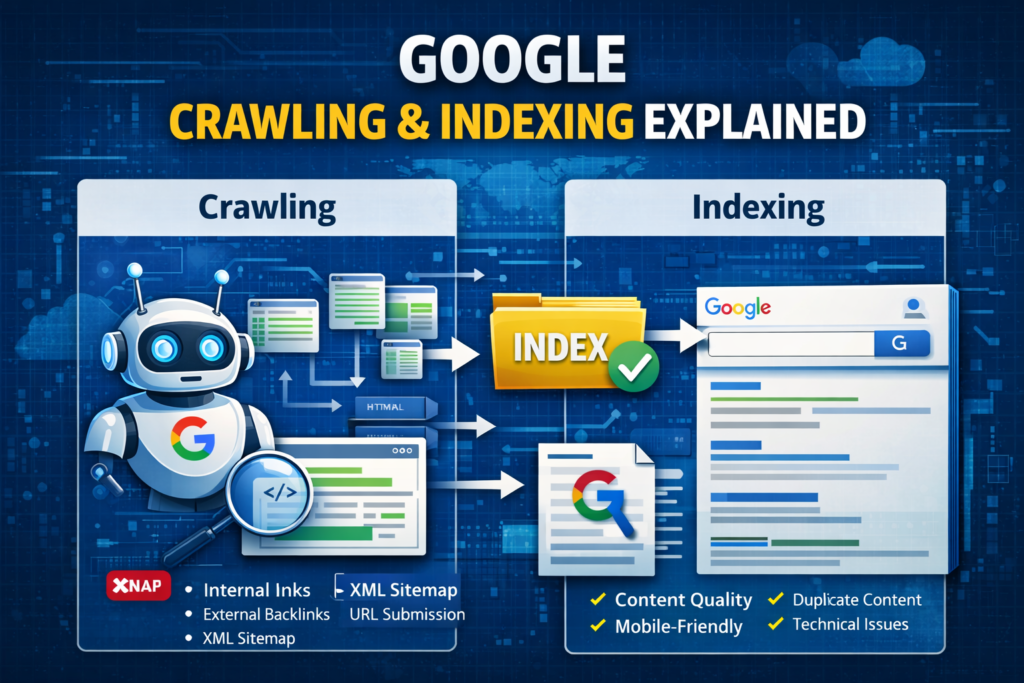

What Is Crawling?

Crawling is the process by which Googlebot discovers and fetches web pages. Googlebot follows internal links, external backlinks, and XML sitemaps to find new or updated content. When a page is crawled, Google downloads it and analyzes its content, links, and technical elements.
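To make link-following concrete, here is a minimal Python sketch of the first step a crawler performs on a fetched page: collecting `<a href>` values and resolving them to absolute URLs. It uses only the standard library, works on an HTML string you supply rather than fetching anything, and the helper names (`LinkExtractor`, `extract_links`) and example URLs are hypothetical. Googlebot's actual discovery pipeline is not public and is far more involved.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse

# Hypothetical helper names for illustration; not part of any Google tool.


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def extract_links(html, base_url):
    """Return absolute, fragment-free http(s) URLs linked from a page, in first-seen order."""
    parser = LinkExtractor()
    parser.feed(html)
    links = []
    for href in parser.hrefs:
        # Resolve relative links against the page URL and drop #fragments,
        # since a fragment does not identify a separate page.
        absolute, _fragment = urldefrag(urljoin(base_url, href))
        if urlparse(absolute).scheme in ("http", "https") and absolute not in links:
            links.append(absolute)
    return links


if __name__ == "__main__":
    page = """
    <a href="/blog/">Blog</a>
    <a href="guide.html#intro">Guide</a>
    <a href="mailto:hi@example.com">Email</a>
    <a href="https://other.example.org/">Partner</a>
    """
    for url in extract_links(page, "https://example.com/seo/"):
        print(url)
```

Both internal and external links are kept here, mirroring the way crawlers follow links in both directions; non-web schemes such as `mailto:` are skipped.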

What Is Indexing?

Indexing occurs after crawling. Google stores and organizes the information it collects so that pages can appear in search results. Pages that are crawled but not indexed are invisible in Google Search.

Modern SEO strategies increasingly focus on ensuring that only high-value pages are indexed. A deeper look at this evolution can be found in the article on authority site networks and modern SEO strategy for 2025.

How Google Crawls and Indexes Websites

Googlebot discovers websites through multiple signals, including:

- Internal links that connect related pages

- External backlinks from authoritative sites

- XML sitemaps submitted via Search Console

- Previously indexed URLs that are revisited

A clean site architecture and strong internal linking help Googlebot understand page relationships. The SEO Traffic Hero team applies these principles across all projects; you can learn more about the team on the About Us page.

Why Google Crawling and Indexing Optimization Matters

Optimizing crawling and indexing ensures that:

- Important pages are discovered quickly

- Crawl budget is used efficiently

- Indexing delays are reduced

- Ranking potential is maximized

Without proper optimization, websites may suffer from index coverage issues or incomplete indexing. If you want to understand why technical SEO and structured optimization matter, see why businesses trust SEO Traffic Hero.

Common Crawling and Indexing Issues

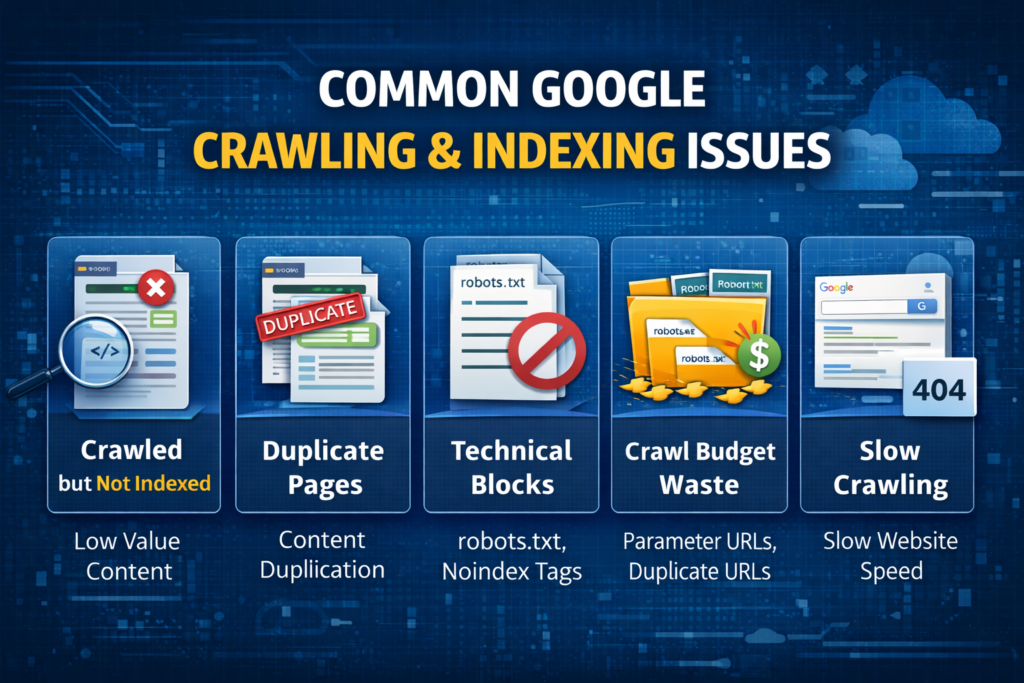

Crawled but Not Indexed Pages

This issue typically occurs when:

- Content is thin or duplicated

- Pages lack internal links

- Quality signals are weak
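One way to screen for the first cause is to flag pages with little body text and pairs of pages whose wording largely overlaps. The sketch below, assuming you already have each page's main text, uses word counts and Jaccard similarity over three-word shingles, a common near-duplicate technique rather than anything Google has documented. The function names and both thresholds are hypothetical.

```python
import re

# Hypothetical audit helpers; thresholds are illustrative, not Google values.


def words(text):
    """Lowercase word tokens; a deliberately simple tokenizer."""
    return re.findall(r"[a-z0-9']+", text.lower())


def shingles(text, size=3):
    """Set of overlapping word n-grams ("shingles") used to compare pages."""
    tokens = words(text)
    return {tuple(tokens[i:i + size]) for i in range(len(tokens) - size + 1)}


def jaccard(a, b):
    """Shared shingles divided by all distinct shingles (0.0 to 1.0)."""
    union = a | b
    if not union:
        return 0.0  # too short to compare; the thin-content check covers these pages
    return len(a & b) / len(union)


def audit_pages(pages, min_words=300, dup_threshold=0.8):
    """Flag thin and near-duplicate pages.

    pages maps URL -> main body text. The default thresholds are
    illustrative assumptions, not values published by Google.
    """
    thin = [url for url, text in pages.items() if len(words(text)) < min_words]
    sigs = {url: shingles(text) for url, text in pages.items()}
    urls = list(pages)
    near_dupes = []
    # Pairwise comparison is O(n^2), which is fine for small audits.
    for i, first in enumerate(urls):
        for second in urls[i + 1:]:
            score = jaccard(sigs[first], sigs[second])
            if score >= dup_threshold:
                near_dupes.append((first, second, round(score, 2)))
    return thin, near_dupes
```

Pages flagged this way are candidates for expansion, consolidation, or a canonical tag; the scores say nothing about how Google itself evaluates a page.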

Crawl Budget Wastage

Crawl budget is often wasted on:

- Duplicate URLs

- Parameter-based pages

- Low-value or outdated content
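Parameter-based duplicates are easier to manage once you can see which crawled URLs collapse into the same page. This sketch normalizes URLs by lowercasing the scheme and host, dropping fragments, removing parameters that do not change content, and sorting the rest. The `IGNORED_PARAMS` set is a hypothetical example; build your own from the parameters your site actually uses.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical list of parameters assumed to change tracking or ordering, not content.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "utm_term",
                  "utm_content", "gclid", "fbclid", "sessionid", "sort"}


def normalize_url(url):
    """Map URL variants that serve the same content onto one form."""
    parts = urlsplit(url)
    # Keep only meaningful parameters, sorted so ?a=1&b=2 equals ?b=2&a=1.
    query = sorted((k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
                   if k.lower() not in IGNORED_PARAMS)
    path = parts.path or "/"
    # The fragment is dropped entirely: it never reaches the server.
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path,
                       urlencode(query), ""))


def group_duplicates(urls):
    """Group crawled URLs by normalized form; groups with >1 member waste crawl budget."""
    groups = {}
    for url in urls:
        groups.setdefault(normalize_url(url), []).append(url)
    return {key: members for key, members in groups.items() if len(members) > 1}
```

Each multi-member group is a candidate for a canonical tag pointing at the normalized URL, or for blocking the parameter variants.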

Technical Barriers

Technical problems such as incorrect robots.txt rules, improper canonical tags, or accidental noindex directives can prevent indexing altogether.
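A quick way to catch the first and last of these barriers is to test a URL against your robots.txt rules and look for `noindex` in the page's robots meta tags or `X-Robots-Tag` header. The sketch below uses Python's `urllib.robotparser`, which applies simple first-match prefix rules and does not reproduce every detail of Google's parser (such as wildcards and longest-match precedence), so treat its answers as a first pass; `check_indexability` and `RobotsMetaParser` are hypothetical names. Note also that Google cannot see a `noindex` on a page that robots.txt blocks from being crawled.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser

# Hypothetical helper names; a simplified first-pass check, not Google's logic.


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() in ("robots", "googlebot"):
            for part in (attrs.get("content") or "").split(","):
                self.directives.add(part.strip().lower())


def check_indexability(url, robots_txt, html="", x_robots_tag=""):
    """Return a list of barriers that would stop Googlebot crawling or indexing url."""
    problems = []
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())  # parse rules from text; no network access
    if not robots.can_fetch("Googlebot", url):
        problems.append("blocked by robots.txt")
    meta = RobotsMetaParser()
    meta.feed(html)
    # Simplified: ignores user-agent-prefixed header values such as "googlebot: noindex".
    header = {part.strip().lower() for part in x_robots_tag.split(",")}
    if "noindex" in meta.directives or "noindex" in header:
        problems.append("noindex directive")
    return problems
```

Running this over every URL in your sitemap is one way to spot pages you want indexed that are accidentally blocked or noindexed.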

Step-by-Step Google Crawling and Indexing Optimization Framework

Step 1: Optimize Site Architecture

A logical site structure improves crawl efficiency:

- Keep important pages within a few clicks of the homepage

- Use clear category hierarchies like those in the SEO category

- Avoid orphan pages
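Click depth and orphan pages can both be measured from an internal-link map with a breadth-first search from the homepage. In this sketch, `links` maps each URL to the internal URLs it links to (for example, from your own crawl), and `known_urls` is a hypothetical list of every page you expect to exist, such as your sitemap URLs. The `max_depth=3` cutoff is an illustrative stand-in for "a few clicks", not a Google rule.

```python
from collections import deque

# Hypothetical helpers; max_depth is an illustrative threshold.


def click_depths(links, homepage):
    """Breadth-first search over internal links; returns {url: clicks from homepage}."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def architecture_report(links, homepage, known_urls, max_depth=3):
    """Find pages that are too deep, and pages no internal link reaches (orphans)."""
    depths = click_depths(links, homepage)
    too_deep = sorted(url for url, depth in depths.items() if depth > max_depth)
    orphans = sorted(set(known_urls) - set(depths))
    return too_deep, orphans
```

Orphans found this way exist in your sitemap or CMS but cannot be reached by following links from the homepage, so they depend entirely on the sitemap for discovery.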

Step 2: Strengthen Internal Linking

Internal linking guides Googlebot to your pages and passes link signals between them. Linking from authoritative pages, such as in-depth SEO strategy articles, to newer or priority pages helps those pages get discovered, indexed, and seen.

For example:

- Linking SEO strategy discussions to the homepage of SEO Traffic Hero

- Linking supporting content contextually from authoritative articles
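To find the newer or priority pages that need such links, count how many distinct pages link to each one. This sketch takes a hypothetical `links` map from each source URL to the internal URLs it links to, and reports pages below an arbitrary minimum; the helper names and the default `minimum=2` are illustrative assumptions.

```python
# Hypothetical helpers; the minimum is an arbitrary illustrative cutoff.


def inbound_link_counts(links, pages):
    """Count distinct internal pages linking to each page (self-links ignored).

    links maps a source URL to the URLs it links to; pages is the set to report on.
    """
    counts = {page: 0 for page in pages}
    for source, targets in links.items():
        for target in set(targets):  # several links from one page count once
            if target != source and target in counts:
                counts[target] += 1
    return counts


def weakly_linked(links, pages, minimum=2):
    """Pages with fewer than `minimum` inbound internal links, weakest first."""
    counts = inbound_link_counts(links, pages)
    return sorted((p for p in pages if counts[p] < minimum),
                  key=lambda p: (counts[p], p))
```

Pass only the pages you care about (for example, recent posts) as `pages`; the homepage naturally has few inbound links and would otherwise top the list.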

Step 3: Submit and Maintain an XML Sitemap

An XML sitemap helps Google identify which pages should be crawled and indexed. Ensure your sitemap:

- Contains only indexable URLs

- Is updated automatically when pages are added, changed, or removed

- Is submitted in Google Search Console
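If your CMS does not generate a sitemap, a small script can. This sketch builds sitemap XML in the sitemaps.org 0.9 format from hypothetical `(url, lastmod, indexable)` tuples and leaves out anything not marked indexable, matching the first rule above. Where the indexable flag comes from (noindex status, canonical target, HTTP status) is an assumption left to your own data.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

# Hypothetical helper; input tuples are assumed to come from your own CMS or crawl.


def build_sitemap(pages):
    """Return sitemap XML for (url, lastmod, indexable) tuples, skipping non-indexable URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod, indexable in pages:
        if not indexable:
            continue  # e.g. noindexed, redirected, or non-canonical URLs stay out
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # ElementTree escapes & and < automatically
        if lastmod:
            ET.SubElement(entry, "lastmod").text = lastmod  # W3C date, e.g. "2025-01-15"
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

Writing the result to `sitemap.xml` at the site root and re-running the script whenever content is published is one way to keep the sitemap updated automatically.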

Step 4: Optimize Crawl Budget

Effective crawl budget optimization includes:

- Blocking low-value URLs

- Consolidating duplicate content

- Reducing redirect chains

- Using canonical tags correctly
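Redirect chains are easy to spot once you have a map of each redirecting URL and its target. This sketch follows each chain to its final destination so redirects and internal links can be updated to point there in a single hop. The `redirects` dictionary and the `max_hops` safety limit are assumptions for illustration.

```python
# Hypothetical helpers; max_hops is an assumed safety limit.


def resolve_redirects(redirects, url, max_hops=10):
    """Follow a {source: target} redirect map; return the hop list ending at the final URL."""
    path = [url]
    while path[-1] in redirects:
        nxt = redirects[path[-1]]
        if nxt in path:
            raise ValueError("redirect loop: " + " -> ".join(path + [nxt]))
        path.append(nxt)
        if len(path) - 1 > max_hops:
            raise ValueError("too many redirects from " + url)
    return path


def find_chains(redirects):
    """Map each URL that needs 2+ hops to its final destination, for flattening."""
    fixes = {}
    for source in redirects:
        path = resolve_redirects(redirects, source)
        if len(path) > 2:  # more than one hop means a chain
            fixes[source] = path[-1]
    return fixes
```

Each entry in the result is a redirect to rewrite as a direct source-to-destination redirect.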

Step 5: Fix Index Coverage Issues

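Regularly review the Page indexing report (formerly the Index Coverage report) in Google Search Console to find pages that are not indexed and the reasons Google gives, such as "Crawled - currently not indexed" or "Discovered - currently not indexed". Addressing these issues improves overall indexing health.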

Best Practices for Faster Google Indexing

- Publish original, high-quality content

- Add internal links immediately after publishing

- Ensure fast page load times

- Optimize for mobile-first indexing

- Request indexing for priority pages with the URL Inspection tool in Search Console

Google Search Central documentation is the authoritative reference for crawling and indexing best practices.

Advanced Crawling and Indexing Optimization Tips

Use Log File Analysis

Log file analysis reveals how Googlebot interacts with your site, which pages are crawled most often, and where crawl budget may be wasted.
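As a starting point, the sketch below counts Googlebot requests per path and per HTTP status from access logs in the common Apache/Nginx "combined" format. It assumes that log format and matches on the user-agent string only; because user agents can be spoofed, Google recommends confirming real Googlebot traffic with a reverse DNS lookup, which this sketch does not do. The regex and function name are hypothetical.

```python
import re
from collections import Counter

# Assumed "combined" log format: IP, identity, user, [time], "request", status, size,
# "referer", "user-agent". Adjust the pattern if your server logs differently.
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Count Googlebot requests per path and per status code (user-agent match only)."""
    paths, statuses = Counter(), Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "googlebot" not in match["agent"].lower():
            continue  # unparseable line or non-Googlebot request
        paths[match["path"]] += 1
        statuses[match["status"]] += 1
    return paths, statuses
```

Paths with many hits that you would never want indexed, or frequent 404 and 301 responses, point to where crawl budget may be wasted.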

Optimize JavaScript Rendering

Ensure essential content is accessible without heavy reliance on delayed JavaScript rendering. Server-side rendering can improve crawlability for complex websites.
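Because rendering can happen later than the initial crawl, one rough check is whether key content is already present in the HTML your server returns, before any JavaScript runs. This sketch extracts text from a raw HTML string while skipping `<script>` and `<style>` contents and reports which required phrases are missing; fetching the HTML is left out, and `missing_without_js` and `VisibleTextParser` are hypothetical helpers.

```python
from html.parser import HTMLParser

# Hypothetical helpers; inspects only the raw HTML string you pass in.


class VisibleTextParser(HTMLParser):
    """Collects text from raw HTML, skipping <script> and <style> contents."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.depth = 0  # > 0 while inside a skipped element
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self.depth:
            self.depth -= 1

    def handle_data(self, data):
        if not self.depth:
            self.chunks.append(data)


def missing_without_js(raw_html, required_phrases):
    """Return the phrases that do not appear in the server-delivered HTML text."""
    parser = VisibleTextParser()
    parser.feed(raw_html)
    # Collapse whitespace and lowercase so matching ignores layout and case.
    text = " ".join(" ".join(parser.chunks).split()).lower()
    return [p for p in required_phrases if p.lower() not in text]
```

Any phrase this reports as missing only appears after JavaScript runs, which makes it a candidate for server-side rendering.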

Common SEO Mistakes to Avoid

- Blocking important pages accidentally

- Indexing thin or duplicate content

- Ignoring internal linking structure

- Overusing noindex tags

- Publishing content without making sure it is linked and crawlable

Avoiding these mistakes supports better long-term SEO performance.

Real-World Use Cases

New Website Indexing

New websites benefit from strong internal linking from the homepage and core SEO resources, helping Googlebot discover content faster.

Large Website Crawl Management

Large websites must carefully manage crawl budget and indexation to ensure high-value pages receive priority, a principle emphasized in modern SEO strategies.

Frequently Asked Questions

What is Google crawling?

Google crawling is the process where Googlebot discovers and reads web pages by following links and sitemaps.

What is Google indexing?

Indexing is the process of storing crawled pages in Google’s database so they can appear in search results.

How long does Google take to index a page?

Indexing can take from hours to weeks, depending on site authority and crawl signals.

Why are pages crawled but not indexed?

This usually happens due to low-quality content, duplication, or weak internal linking.

Does internal linking help indexing?

Yes, internal links guide Googlebot to important pages and improve discoverability.

Google Search Central provides official documentation explaining how crawling and indexing work.

Conclusion

Google crawling and indexing optimization is the backbone of technical SEO. By improving site architecture, internal linking, crawl budget usage, and technical health, you make it easier for Google to discover, understand, and index your content.

A well-optimized site supports faster indexing, stronger topical authority, and sustainable rankings, keeping your website discoverable, indexable, and capable of ranking in Google Search over the long term.